Neural network supercharges model’s ability to predict phase transitions

Place a pot of water on the stove and light the fire. Once the temperature in the pot reaches 100°C or so, the water will boil into vapour. This is an example of a phase transition that occurs every day in our houses. Yet scientists have difficulty predicting whether a bunch of water molecules, like those in the pot, will be liquid or gaseous under a given set of conditions.

This difficulty is different from your everyday experience with the pot on the stove: it has to do with the models a computer can simulate to predict the phase of a group of interacting particles. Models that can make these predictions efficiently are prized in the study of wet surfaces, porous materials, microfluidics, and biological cells. They can also reveal ‘hidden’ phenomena we may not notice at the macroscopic level, i.e. just by looking at the water boil, which scientists can use to make sense of other things or to come up with new applications.

Remember your high school practicals notebook? For each experiment, you had to spell out sections called “given”, “to find”, “apparatus”, “methods”, and “results”. A model is an “apparatus” — a computer program — that uses the “given” (some input data) and certain “methods” (model parameters) to generate “results”. For example, the model below shows how a fluid with certain properties, like air, flowing around a spherical obstacle in its path, like a big rock, leads to the formation of vortices.

A popular “method” that models use to predict a phase transition is called classical density functional theory (DFT). Say there are a bunch of particles in a container. These particles can be the atoms of a gas, molecules of water, whatever the smallest unit of the substance is that you’re studying. Every three-dimensional distribution of these particles has a free energy associated with it, and the rule that assigns this value is called the free-energy functional. (A functional is like a function, except that its input is itself a function, here the density distribution, and its output is a single number.) The free-energy functional calculates the total free energy of a system based on how the density of its particles is distributed in three dimensions.

Classical DFT is a way to find the equilibrium state of a system, i.e. the stable state in which its macroscopic properties don’t change and there is no net exchange of energy with its surroundings, by minimising the system’s free energy.

A model can thus simulate a group of particles in a container, varying their distribution until it finds the one for which the free-energy functional takes its lowest value. That distribution is the equilibrium state of the system under the given conditions. “Once [the free-energy functional] is specified, consistent and complete investigation of a wide variety of properties can be made,” the authors of a paper published in the journal Physical Review X on January 24 wrote.
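The minimisation described above can be sketched for the simplest possible case. This is only an illustration under stated assumptions, not the paper's method: the particles form an ideal gas (no interactions) in one dimension, the external potential `v_ext` is a made-up harmonic trap, energies are in units of kT, and the chemical potential `mu` is held fixed, so the quantity being minimised is strictly the grand potential. The names `grand_potential` and `minimise` are hypothetical. For this toy case the exact answer is known, `exp(mu - v_ext)`, which makes the result easy to check.

```python
import numpy as np

def grand_potential(rho, v_ext, mu, dx):
    """Ideal-gas grand potential on a grid (units of kT, thermal wavelength = 1)."""
    return float(np.sum(rho * (np.log(rho) - 1.0) + rho * (v_ext - mu)) * dx)

def minimise(v_ext, mu, dx, steps=200, alpha=0.2):
    """Lower the grand potential by damped steps on the log-density."""
    log_rho = np.zeros_like(v_ext)  # start from a uniform density rho = 1
    history = []
    for _ in range(steps):
        # Functional derivative of the grand potential with respect to rho(x).
        gradient = log_rho + v_ext - mu
        # Working with log(rho) keeps the density positive at every step.
        log_rho = log_rho - alpha * gradient
        history.append(grand_potential(np.exp(log_rho), v_ext, mu, dx))
    return np.exp(log_rho), history

# Assumed toy setup: a harmonic trap on a 1D grid.
x = np.linspace(-3.0, 3.0, 121)
dx = x[1] - x[0]
v_ext = 0.5 * x**2
mu = 0.0

rho, history = minimise(v_ext, mu, dx)
exact = np.exp(mu - v_ext)  # known minimiser for the ideal gas
print(np.max(np.abs(rho - exact)))
```

For interacting particles the functional gains an extra, generally unknown, term, and that term is the hard part the article goes on to discuss.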

While this sounds simple, the problem is that determining the free-energy functional becomes more difficult the more particles there are. And only once the functional has been determined can the model check when its value is lowest. This is why a model using classical DFT to determine the properties of a liquid at a specific temperature and pressure, say, will struggle.

In the January 24 study in Physical Review X, scientists from the University of Bayreuth and the University of Bristol made an advance in this process when they replaced the free-energy functional with a neural network that had been trained on simulations of particles-in-a-container in a variety of conditions (e.g. changing the pressure and temperature across a range of values), then used it to model a realistic fluid.

From the abstract of the paper:

Local learning of the one-body direct correlation functional is based on Monte Carlo simulations of inhomogeneous systems with randomized thermodynamic conditions, randomized planar shapes of the external potential, and randomized box sizes.

Monte Carlo simulations are quite cool. You set up a computer to simulate, say, a poker game with five players. As the game progresses, at some point in the game you ask the computer to take a snapshot of the game and save it. This snapshot has information about each player’s cards, what decisions they made in the previous round (fold, call or raise), the stakes, and the cards on the table. Once the game ends, you rerun the simulation, each time freshly randomising the cards handed out to the players. Then again at some point during the game, the computer takes a snapshot and saves it.

Once the computer has done this a few thousand times, you collect all the snapshots and share them with someone who doesn’t know poker. Based on understanding just the snapshots, they can learn how the game works. The more snapshots there are, the finer their understanding will be. Very simply speaking this is how a Monte Carlo simulation operates.
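The idea of learning from many randomised runs can be sketched with a much smaller question than a full poker game. The example below is an illustration only, with hypothetical function names: it deals many random five-card hands and counts how often at least two cards share a rank. The exact answer is roughly 0.49, so the estimate should land close to that as the number of trials grows.

```python
import random

def has_pair_or_better(hand):
    """True if at least two cards in the hand share a rank (cards are 0..51)."""
    ranks = [card % 13 for card in hand]
    return len(set(ranks)) < len(ranks)

def estimate_pair_probability(trials, seed=0):
    """Estimate the probability by dealing `trials` random five-card hands."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    deck = list(range(52))
    hits = 0
    for _ in range(trials):
        hand = rng.sample(deck, 5)  # one random 'snapshot'
        if has_pair_or_better(hand):
            hits += 1
    return hits / trials

estimate = estimate_pair_probability(20000)
print(estimate)
```

The more hands are dealt, the smaller the statistical scatter in the estimate, which mirrors the point that more snapshots give a finer understanding.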

The researchers generated data for the neural network to train on by running around 900 Monte Carlo simulations of “inhomogeneous systems with randomized thermodynamic conditions [including temperature], randomized planar shapes of the external potential, and randomized box sizes”. (The external potential refers to some energy field applied across the system, giving each of the particles inside some potential energy.) Then they used their classical DFT model with the “neural functional” to study a truncated Lennard-Jones system.

Scientists have previously combined machine learning with classical DFT models to study particles moving randomly, interacting with each other only when they collide. Actual, real fluids aren’t so simple, however. Instead, their behaviour is more closely modelled as a Lennard-Jones system: the particles in a container repel each other at very short distances, are attracted to each other across intermediate distances, and at larger distances don’t have an effect on each other. As the researchers wrote in their paper:

… understanding the physics in such a simple model, which encompasses both repulsive and attractive interparticle interactions, provides a basis for understanding the occurrence of the same phenomena that arise in more complex fluids.
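The three regimes of a truncated Lennard-Jones interaction (repulsion up close, attraction at intermediate range, nothing beyond a cutoff) can be written down in a few lines. This is a minimal sketch using the standard 12-6 form. The function name and the default cutoff of 2.5 particle diameters are assumptions for illustration, not values taken from the article.

```python
import numpy as np

def truncated_lennard_jones(r, epsilon=1.0, sigma=1.0, r_cut=2.5):
    """Pair energy at separation r > 0; zero beyond r_cut * sigma (assumed cutoff)."""
    r = np.asarray(r, dtype=float)
    sr6 = (sigma / r) ** 6
    # Standard 12-6 form: steep repulsion minus a longer-ranged attraction.
    u = 4.0 * epsilon * (sr6**2 - sr6)
    # Truncation: particles further apart than the cutoff do not interact.
    return np.where(r < r_cut * sigma, u, 0.0)

r_min = 2.0 ** (1.0 / 6.0)  # separation at which the attraction is strongest
print(truncated_lennard_jones([0.9, 1.0, r_min, 3.0]))
```

With the defaults, the energy is positive at 0.9 (repulsion), zero at 1.0, equal to minus epsilon at `r_min` (the bottom of the attractive well), and zero at 3.0 (past the cutoff).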

They also added that:

… recent investigations did not address the fundamental issue of how the presence of a phase transition might be accounted for within the framework of a neural density functional.

So they set about studying a truncated Lennard-Jones system with a phase transition. Their model started with predicting how the particles are distributed, the overall system’s thermodynamic properties, the conditions in which liquid and gaseous phases coexist in the container, and the particles’ behaviour at interfaces, like evaporating from the surface of a hard wall. Then, the researchers wrote:

… we focus on the liquid-gas transition which is a basic manifestation of the presence of interparticle attraction and seek to assess whether the neural functional can describe (i) phase coexistence and the approach to the associated critical point, (ii) surface tension and density profiles of the liquid-gas interface, (iii) drying and capillary evaporation transitions that occur at subcritical temperatures, and (iv) how accurately the approach performs for both bulk and interfacial properties.

(Emphasis in the original.)

So could the neural functional describe (i)-(iv)?

The answer is emphatically yes.

In fact, the model was able to accurately predict phase transitions even when it was trained only on supercritical states, i.e. states above the critical temperature, where the distinction between liquid and gas disappears and the two phases don’t coexist. The researchers singled out this ability of the model for special praise, calling it “one of the most striking results”.

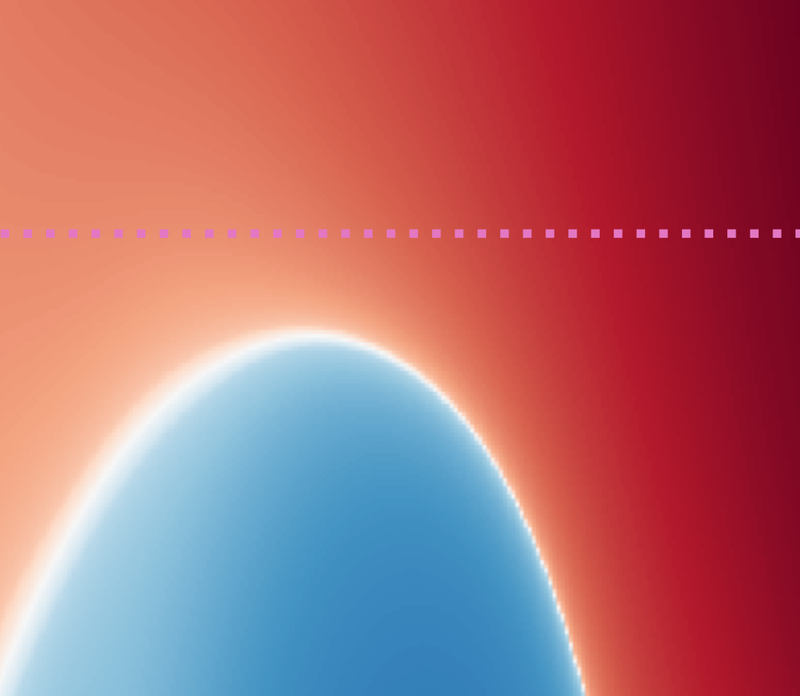

This plot, generated by the model, shows the states of a truncated Lennard-Jones fluid with density on the x-axis and temperature on the y-axis. In the red areas, the substance — the collection of particles in the box — is either liquid or gaseous. In the blue areas, the liquid and gaseous phases become separated. The intensity of the colour denotes the substance’s bulk modulus, i.e. how much it resists being compressed at a fixed temperature, from dark blue at the lower end to dark red at the upper.

Overall, the researchers wrote that their “neural functional approach” is distinguished by the fact that “the range of phenomena and results it can describe … far exceed the information provided during training.” They attribute this ability to the information contained in a “single numerical object” that the neural network was tuned to track: c₁(r; [ρ], T), a.k.a. the one-body direct correlation functional. It’s a functional that describes the variation of the density of particles inside the container in response to the external potential. As they put it:

Inputting only Monte Carlo training data of one-body profiles in planar geometry and then examining c₁(r; [ρ], T) through the functional lens provides access to quantities which could not be obtained directly from the input data. Indeed, determining these usually requires advanced simulation techniques.
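To see how the one-body direct correlation functional is used once it is known, recall the standard classical DFT condition for equilibrium: the density equals exp(μ − V_ext + c₁) in units of kT, with c₁ itself depending on the density. The equation therefore has to be solved self-consistently. The sketch below does this by damped fixed-point iteration in one dimension. Everything specific in it is an assumption for illustration: `toy_c1` is a hypothetical, purely local stand-in for the trained neural network, and the harmonic trap, chemical potential, and mixing parameter are made up.

```python
import numpy as np

def toy_c1(rho, strength=1.0):
    """Hypothetical stand-in for the neural functional: a simple local repulsion."""
    return -strength * rho

def solve_density(v_ext, mu, c1, steps=200, mixing=0.3):
    """Damped fixed-point iteration of rho = exp(mu - v_ext + c1[rho]) (kT = 1)."""
    rho = np.exp(mu - v_ext)  # start from the ideal-gas profile
    for _ in range(steps):
        rho_new = np.exp(mu - v_ext + c1(rho))
        # Mix old and new profiles so the iteration does not oscillate.
        rho = (1.0 - mixing) * rho + mixing * rho_new
    return rho

# Assumed toy setup: a harmonic trap on a 1D grid.
x = np.linspace(-3.0, 3.0, 121)
v_ext = 0.5 * x**2
mu = 0.0

rho = solve_density(v_ext, mu, toy_c1)
residual = float(np.max(np.abs(rho - np.exp(mu - v_ext + toy_c1(rho)))))
print(residual)
```

In the approach the article describes, a neural network trained on simulation data takes the place of `toy_c1`. A realistic correlation functional would also depend on the density in a neighbourhood of each point rather than only at the point itself.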

They added that their method also required fewer computational resources than a classical DFT setup operating without a neural functional to achieve “comparable” accuracy. On the back of this resounding success, the researchers plan to use their model to study interactions in water and colloidal gels. They also wrote that they expect their findings will help solve problems in computational chemistry and condensed matter physics.